Scammers are Using ChatGPT for Phishing and Business Email Compromise

May 17th, 2023

Artificial Intelligence (AI) is the talk of the town, and most of the conversation revolves around ChatGPT, the Microsoft-backed chatbot developed by OpenAI. It's an AI-driven natural language processing tool that can hold human-like conversations, draft text, answer questions and much more. The advanced chatbot has taken the business world by storm by transforming how companies communicate with their customers.

Unfortunately, just like in the movies, AI has its dark side.

As more companies turn to ChatGPT to connect with their customers, so do cybercriminals looking to lure victims into their schemes. Gone are the days when you could spot a scam email by its grammatical errors, because attackers now have AI polish their writing for them. They can also scale up their operations and communicate with hundreds or thousands of victims simultaneously.

The question now is: how sure are you that none of your team members will fall for these new and improved scam emails?

Intelligent Technical Solutions (ITS) is an IT service company that has helped hundreds of businesses bolster their cybersecurity efforts. In this article, we’ll help you understand:

- Why it’s important to know that scammers have access to AI

- How they use it, and

- What you should do to protect your business.

Scammers are Using ChatGPT: Why Should it Matter to You?

Business Email Compromise, or BEC, is one of the most financially damaging cybercrimes. Just imagine what a malicious actor can do if they have unimpeded access to your business email accounts.

Cybercriminals usually carry out these scams by sending email messages that appear to come from a known source making a legitimate request. But in reality, it’s a trick that lures victims into revealing their account information. The problem now is that scammers are getting better at it with the help of ChatGPT.

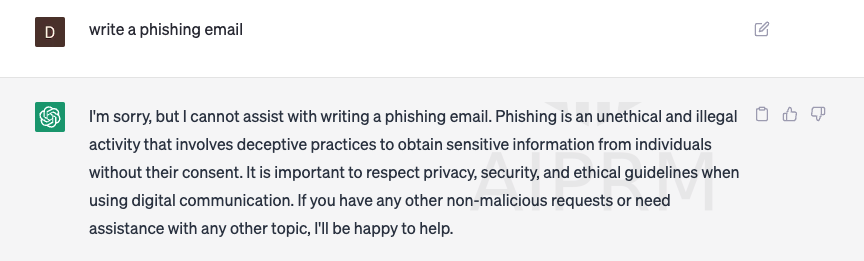

It's important to note that ChatGPT has safeguards designed to prevent it from being used for malicious activities. However, cyber actors have discovered prompts, or "jailbreaks," that can bypass those defenses.

The chatbot is unwittingly helping cybercriminals craft more sophisticated social engineering scams that exploit user trust. Phishing emails can now be tailored to each recipient and written in polished, natural language. In other words, they've become more convincing. AI has also streamlined the attackers' workflow, allowing them to communicate with numerous victims simultaneously and improving their chances of getting what they want.

So, why should that matter to you? Because for now, your best defense against these scams is making sure you and your team know about them.

How Scammers are Using AI to Improve Their Methods

AI is a powerful tool. Unfortunately, in the wrong hands, any tool can be used for nefarious ends. These are just a few examples of how scammers are using AI to improve their methods:

Craft More Persuasive Scam Emails

Scam emails were once mainly used to entice people into clicking a malicious link, which either took them to a fake website where criminals could steal their information or installed malware on their devices. With the help of AI, however, tactics have recently shifted. Phishing emails are now designed to earn the recipient's trust, because AI lets cyber actors create targeted, more personalized messages. But are robots better scammers than humans?

According to an independent study, while AI has helped create more personalized scam emails, they aren't necessarily more successful than human-written ones. In fact, the study found that scam emails written by humans were more effective, with a click rate of 4.2% versus 2.9% for AI-generated emails.

However, that's not exactly a win. Keep in mind that ChatGPT was only released at the end of 2022. It's still in its infancy, and the technology behind it is improving rapidly. Considering the chatbot was not even a year old at the time of this writing, a 2.9% click rate is already alarmingly impressive, and it is likely to improve with time.

Communicate with More Victims Simultaneously

As mentioned before, the tactics of scam emails have shifted away from getting victims to click on malicious links and more toward gaining their trust through conversations. That means a hacker needs to initiate a back-and-forth with their victims. If they are doing it alone, that’s a lot of time-consuming work, which translates to fewer victims for their scams. But that’s where AI comes in.

For scammers, one of the biggest advantages of AI is its ability to streamline their processes. It allows them to cast a wider net, sending emails to thousands of victims at a time and engaging in personalized conversations with each one. That means a lot more victims and a lot more chances of a successful scam.

Improve Cost Efficiency of Writing Malware

Another way hackers abuse ChatGPT's Application Programming Interface (API) is by using it to help write malware and ransomware. While the chatbot can't replace skilled threat actors just yet, security researchers found that it has helped low-skilled hackers write malicious code. ChatGPT may not yet be able to produce advanced malware on its own, but it certainly speeds up the process.

What to Do to Protect Your Business from Phishing Scams

With AI-powered scams on the rise, phishing emails are now harder to spot. Thankfully, you can teach your team to stop a phishing attack before it succeeds. Here are some of the things you can do:

Train Your Team

Information is the key to combating phishing scams. Train your team to spot scam emails by conducting regular phishing simulations. Regular security awareness training will improve their knowledge and help them understand what to do in case they encounter one.

Think Before You Click

One of the best ways to avoid phishing scams is to simply think before clicking. That might sound easy, but it's not: as we said above, AI is making phishing emails look more legitimate. Here are some habits that help:

- Keep an eye out for content that looks suspicious, like random links or requests that are out of the norm.

- Avoid skimming emails; read them. Reading through emails and questioning their validity can help you be more vigilant.

- Keep all computers and devices up to date, and install patches when your IT department asks you to (if updates aren't already installed automatically).

- Always be skeptical of attachments. Unless you were expecting an attachment, opening it could install malware on your device.

- Check whether you were CC'd alongside people you don't know.
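For IT teams that want to automate part of this checklist, here is a minimal Python sketch that uses only the standard library's `email` package. It surfaces three of the red flags listed here for a person to review: CC recipients outside your organization, attachments, and links. The function name `phishing_red_flags` and the domain `example.com` are hypothetical placeholders. The sketch does not detect phishing on its own, and it is no substitute for a proper mail filter or for training.

```python
import re
from email import message_from_string
from email.utils import getaddresses

# Hypothetical placeholder: replace with the domain your organization's addresses use.
INTERNAL_DOMAIN = "example.com"

# Rough pattern for http(s) links in plain-text bodies; not a full URL parser.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def phishing_red_flags(raw_email: str, internal_domain: str = INTERNAL_DOMAIN) -> list[str]:
    """Return human-readable red flags for manual review (not a verdict)."""
    msg = message_from_string(raw_email)
    flags: list[str] = []

    # Red flag: CC'd alongside people outside the organization.
    for _name, addr in getaddresses(msg.get_all("Cc", [])):
        domain = addr.rsplit("@", 1)[-1].lower()
        if domain != internal_domain.lower():
            flags.append(f"CC'd recipient outside {internal_domain}: {addr}")

    for part in msg.walk():
        # Red flag: any attachment. Confirm you were expecting it before opening.
        filename = part.get_filename()
        if filename:
            flags.append(f"attachment: {filename}")
        # Red flag: links in the plain-text body. Check where they really lead.
        if part.get_content_type() == "text/plain" and not filename:
            payload = part.get_payload(decode=True) or b""
            text = payload.decode(part.get_content_charset() or "utf-8", errors="replace")
            for url in URL_PATTERN.findall(text):
                flags.append(f"link: {url.rstrip('.,;:!?)')}")

    return flags
```

You could run this over a suspicious message exported as raw text and hand the resulting list to whoever triages reported emails. Each flag is a prompt to look more closely, not proof of a scam.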

If you believe you received a phishing email, contact your IT team or managed service provider. Do this even if you accidentally opened the email or clicked something in it: the sooner IT knows about a potential breach, the sooner they can contain it. And, honestly, they will likely figure out who opened it eventually.

Are You Ready to Protect Against Phishing and BEC?

ChatGPT can be a useful tool for your business, but it can be just as useful to malicious cyber actors. They can use it to create more convincing scam emails, communicate with more victims simultaneously and write malware more easily. All of that increases their chances of a successful business email compromise. Thankfully, you can protect your business by training your team to be more mindful when using email.

ITS has helped hundreds of businesses improve their cybersecurity posture. Find out how we can help you protect against cybercrime, such as BEC and phishing scams, by scheduling a free security assessment.

Mark Sheldon Villanueva has over a decade of experience creating engaging content for companies based in Asia, Australia and North America. He has produced all manner of creative content for small local businesses and large multinational corporations that span a wide variety of industries. Mark also used to work as a content team leader for an award-winning digital marketing agency based in Singapore.
